
Between bits, bytes, and the numerous prefixes that inflate their quantities, there are many different measures of data transfer speeds. Inevitably, lay users find it extremely difficult to make sense of the numbers service providers throw at them. Why, for instance, do internet service providers state network speeds in Mbps?

Why ISPs Use Bits Instead of Bytes

ISPs use bits instead of bytes because the bit is the smallest measurable unit of information. Networks transfer bytes of data one bit at a time, so the number of megabits of information transferred over a network per second provides the most accurate measure of its speed.

Let’s take a closer look at the difference between bits and bytes, how they’re used in different areas of computing, and what different speeds imply for networks and users.

Bits vs. Bytes Explained

In binary computing, a bit is the smallest unit of information. It's a single binary digit that can exist in one of two states: on or off, zero or one (or any other way you choose to state it). Switches, logic, and data all exist in one of these two states.

A byte is a larger unit of measurement consisting of 8 bits. The size comes from early computing, where eight binary digits were used to encode a single character of text. Since most information was initially stored in text form, the measure worked as a guide to quantities of information.

Kilo, Mega, Giga, Tera, and Peta Bits and Bytes

Only using bits and bytes to quantify data produced large and unwieldy numbers. So, engineers used prefixes to refer to larger agglomerations of bits and bytes. Attached to the unit of measure, these prefixes worked as shorthand for larger quantities in the same way that terms like “thousand” or “million” do.

Most people are familiar with this terminology today:

- Kilo: 10^3 or 1,000

- Mega: 10^6 or 1,000,000

- Giga: 10^9 or 1,000,000,000

- Tera: 10^12 or 1,000,000,000,000

- Peta: 10^15 or 1,000,000,000,000,000

A megabit (equal to 1,000,000 bits) or a megabyte (equal to 1,000,000 bytes) is a much more convenient measure of data size than a bit or a byte. Such shorthand is invaluable when referring to the large volumes of information users regularly work with today.
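As a rough illustration of how these prefixes work as shorthand, the sketch below scales a raw count of bits or bytes to the largest prefix that fits. The names `PREFIXES` and `scale` are made up for this example and use the decimal values listed above.

```python
# Decimal prefixes from the list above, largest first.
PREFIXES = [
    ("peta", 10**15),
    ("tera", 10**12),
    ("giga", 10**9),
    ("mega", 10**6),
    ("kilo", 10**3),
]

def scale(count):
    """Return (value, prefix) using the largest prefix that fits the count."""
    for name, factor in PREFIXES:
        if count >= factor:
            return count / factor, name
    return float(count), ""  # too small for any prefix

print(scale(1_000_000))      # (1.0, 'mega')
print(scale(2_500_000_000))  # (2.5, 'giga')
print(scale(500))            # (500.0, '')
```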

Disks and Networks Measure Space and Speed Differently

Why not refer to network speeds in megabytes? After all, at 1,000,000 bytes or 8,000,000 bits, a megabyte is an even larger quantity of data than a megabit. Shouldn’t it make for a more convenient shorthand?

Moreover, megabits and megabytes can be easily stated in terms of each other because they measure similar attributes.

Since 1 byte = 8 bits, a bit = ⅛ or 0.125 bytes. So, 1 bit per second (bps) can also be stated as 0.125 bytes per second (B/s). For the same reason, 1 megabit per second (Mbps) = 0.125 megabytes per second (MB/s).
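The conversion above is simple enough to sketch in a few lines of code. The function names here are illustrative only, and the example assumes decimal prefixes (1 mega = 1,000,000) on both sides.

```python
BITS_PER_BYTE = 8

def mbps_to_mb_per_s(mbps):
    """Convert megabits per second (Mbps) to megabytes per second (MB/s)."""
    return mbps / BITS_PER_BYTE

def mb_per_s_to_mbps(mb_per_s):
    """Convert megabytes per second (MB/s) to megabits per second (Mbps)."""
    return mb_per_s * BITS_PER_BYTE

print(mbps_to_mb_per_s(1))     # 0.125
print(mbps_to_mb_per_s(100))   # 12.5
print(mb_per_s_to_mbps(12.5))  # 100.0
```

So a connection advertised at 100 Mbps moves at most 12.5 MB of data each second.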

It turns out that both measures find very different practical applications in the real world.

Manufacturers of storage devices mostly use megabytes (and gigabytes) to refer to the capacity of their disks. Megabytes per second (or gigabytes per second) refer to the read-write speeds of these disks.

On the other hand, megabits per second (and gigabits per second) are usually used by internet service providers to refer to the speeds and bandwidth of the networks they provide access to.

Data Is Transferred Serially Over Networks

But why do separate systems for similar measures exist in the first place? Aren’t data and transfer speeds the same attributes, whether referring to a disk or a network?

It turns out that data transfers differently depending on whether it’s moving across a network or internally within the different drives on a computer. This crucial difference explains why network speeds are measured differently than disk read-write speeds.

When a computer transfers information internally, it does so using parallel strings of information. For instance, a single piece of information made up of 5 binary digits is communicated simultaneously across 5 channels.

By contrast, the same information transmitted across a network moves serially. That is, the 5 binary digits are transferred across the network one digit at a time before being unpacked at the other end of the network, one digit at a time.
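To make the idea of serial transfer concrete, here is a minimal sketch that breaks bytes into individual bits, "sends" them one at a time, and reassembles them on the other side. It is a toy model rather than a real network protocol, and all function names are invented for this example.

```python
def byte_to_bits(value):
    """Split one byte into its 8 bits, most significant bit first."""
    return [(value >> shift) & 1 for shift in range(7, -1, -1)]

def bits_to_byte(bits):
    """Rebuild a byte from 8 bits, most significant bit first."""
    value = 0
    for bit in bits:
        value = (value << 1) | bit
    return value

def serial_link(data):
    """Yield the data one bit at a time, like a serial connection."""
    for byte in data:
        for bit in byte_to_bits(byte):
            yield bit

def receive(bit_stream):
    """Collect bits as they arrive and regroup them into bytes."""
    out = bytearray()
    buffer = []
    for bit in bit_stream:
        buffer.append(bit)
        if len(buffer) == 8:
            out.append(bits_to_byte(buffer))
            buffer.clear()
    return bytes(out)

print(byte_to_bits(ord("A")))      # [0, 1, 0, 0, 0, 0, 0, 1]
print(receive(serial_link(b"Hi"))) # b'Hi'
```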

Although data is always transferred serially across a network, it doesn’t need to be transferred in the order the original information is coded in. In other words, data can be transmitted serially across a network in any order. It will be received in packets, one at a time, at the other end of the network, and rearranged in its original form.
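The reordering described above can be sketched as follows. This is a simplified model, assuming each packet carries a sequence number that the receiver uses to restore the original order; the `packetize` and `reassemble` helpers are hypothetical and do not correspond to any particular protocol's API.

```python
import random

def packetize(data, size):
    """Split data into (sequence_number, payload) packets of at most `size` bytes."""
    return [
        (seq, data[start:start + size])
        for seq, start in enumerate(range(0, len(data), size))
    ]

def reassemble(packets):
    """Sort packets by sequence number and join their payloads."""
    ordered = sorted(packets, key=lambda packet: packet[0])
    return b"".join(payload for _, payload in ordered)

message = b"bits travel in packets"
packets = packetize(message, 4)

# Simulate packets arriving in a different order than they were sent.
random.shuffle(packets)

print(reassemble(packets) == message)  # True
```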

This serial nature of the transfer of data across networks explains why agglomerations of bits per second are a more accurate representation of network speeds.

Bits Provide the Most Precise Measure of Network Speeds

As the smallest measure of data, bits also convey the most precise information on the quantity of data transferred over a network.

Unlike a byte, a bit cannot be broken down further. This means that part of a byte can be transferred over an interrupted network connection, but a bit can only be entirely transferred or not at all.

Along with the greater accuracy of their description, this precision explains why bits, rather than bytes, are used to describe network speeds. However, these measures also need to be stated in durational terms to work as a measure of speed.

Network Speeds Are Stated in Bits per Second

Since speed is a function of time, network speeds are measured in bits per second. And since modern networks transfer millions of bits each second, internet service providers use megabits per second or gigabits per second to rate the speeds of their networks.

Understand that megabits per second (Mbps) are different from megabytes per second (MB/s). As we’ve seen, they don’t even serve the same functions.
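The difference matters most when estimating download times, because file sizes are usually shown in megabytes while connection speeds are shown in megabits per second. The sketch below gives a best-case estimate only: it assumes the connection sustains its full rated speed and ignores protocol overhead and latency. The function name is made up for this example.

```python
def download_seconds(file_size_mb, link_speed_mbps):
    """Best-case time to download a file, in seconds.

    file_size_mb:    file size in megabytes (MB)
    link_speed_mbps: connection speed in megabits per second (Mbps)
    """
    file_size_megabits = file_size_mb * 8  # 1 byte = 8 bits
    return file_size_megabits / link_speed_mbps

# A 500 MB file over a 100 Mbps connection:
print(download_seconds(500, 100))  # 40.0
```

Reading 100 Mbps as 100 MB/s would suggest 5 seconds, which is off by a factor of eight.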

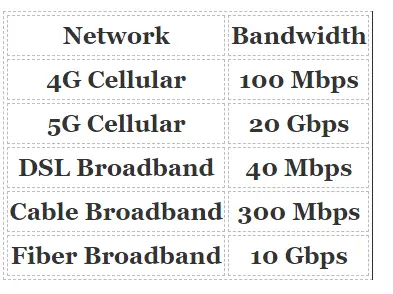

This table shows the maximum bandwidth for the most commonly used networks today.

Bandwidth is a measure of the maximum bits per second a network can transfer. The speed you actually experience is influenced by two main factors: bandwidth and latency.

Actual speeds will vary from network to network and from moment to moment on the same network. To test the current speed of your network, you can head over to one of the many free web applications. It only takes minutes. Some platforms can even do it in seconds.

Internet Speed Requirements To Access Different Services

While the figures in the earlier section give you an idea about network speeds, they don’t convey how much speed you actually need.

For instance, if you use the internet to browse and send emails, you'll have very different connectivity requirements from someone who plays multiplayer games online.

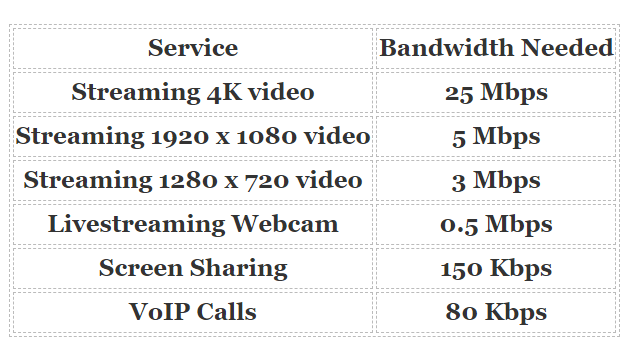

For an idea of the bandwidth requirements for some of the most commonly used services today, consult the following table.

As you can see, the bandwidth requirement varies quite widely from task to task.

By comparing the bandwidth requirements for the different tasks you perform on your computer regularly to your network’s internet speeds, you can determine if your connection speed is sufficient for your current needs.
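One way to run this comparison is to add up the requirements of everything you expect to do at the same time and check the total against your measured speed. The sketch below does that; the task names and Mbps figures in it are placeholder values chosen for illustration, not figures from this article, so substitute the real requirements for your own services.

```python
def is_sufficient(connection_mbps, task_requirements_mbps):
    """Return True if the connection covers all tasks running at once."""
    total_needed = sum(task_requirements_mbps.values())
    return connection_mbps >= total_needed

# Placeholder figures for illustration only (not taken from the article).
household_tasks = {
    "video call": 3.0,
    "video streaming": 5.0,
}

print(is_sufficient(25, household_tasks))  # True
print(is_sufficient(5, household_tasks))   # False
```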

You may find that you don't need such high network speeds for the tasks you do. In that case, you can downgrade to a slower plan and save yourself some money.

What Are Bytes Used To Measure?

We’ve seen that bits are used to measure network speeds. So what exactly do bytes measure?

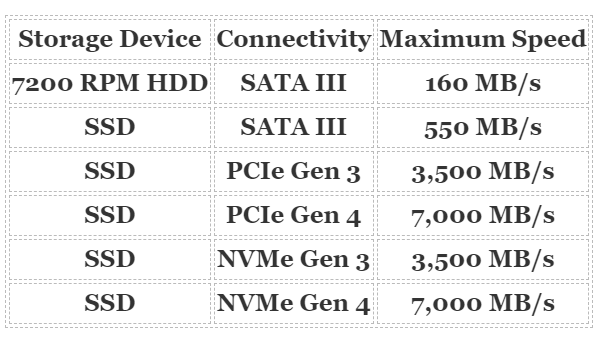

Bytes measure data transfer speeds between storage devices. Because disks read and write information in bytes, disk capacity is usually referred to by manufacturers in terms of agglomerations of bytes. For the same reasons, MB/s is used to denote disk read and write speeds.

The following table lists transfer speeds for the most common types of storage devices today. Since these speeds depend on the interface type used to connect the disks to your computer, these have also been specifically listed.

Final Thoughts

ISPs measure internet speed in megabits because networks transfer data serially, one bit at a time. Calculating speeds this way offers the most accurate and precise measurement of the information transferred over a network each second.